We’ve been told, “The eyes are the window to the soul.” Well, windows work two ways. Our eyes are also our windows to the world. What we see and how we see it help determine how we move through the world. In other words, our vision helps guide our actions, including social behaviors. Now, a young Cold Spring Harbor Laboratory (CSHL) scientist has uncovered a major clue to how this works. He did it by building a special AI model of the common fruit fly brain.

CSHL Assistant Professor Benjamin Cowley developed the AI model in collaboration with the Pillow and Murthy neuroscience labs at Princeton University. The team honed their model through a technique they developed called “knockout training.” First, they recorded a male fruit fly’s courtship behavior—chasing and singing to a female. Next, they genetically silenced specific types of visual neurons in the male fly and trained their AI to detect any changes in behavior. By repeating this process with many different visual neuron types, they were able to get the AI to accurately predict how the real fruit fly would act in response to any sight of the female.
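
To make the idea concrete, here is a minimal toy sketch of knockout training in Python. It is not the architecture or code used in the study. Every name and number in it is an assumption made for illustration: the neuron-type labels in `NEURON_TYPES`, the hidden weights inside `toy_fly` (a stand-in for recorded behavior), and the simple one-unit-per-neuron-type linear model. The one idea it tries to capture is that whenever the training data come from a fly with a given neuron type silenced, the matching model unit is switched off too.

```python
import random

# Hypothetical labels; these are NOT real fly cell types.
NEURON_TYPES = ["type_A", "type_B", "type_C"]


def toy_fly(stimulus, silenced=None):
    """Stand-in for recorded behavior (an assumption, not real data).

    Each neuron type contributes one weighted visual feature; a silenced
    type contributes nothing.
    """
    hidden_weights = [0.8, -0.5, 0.3]  # made-up "ground truth"
    return sum(
        w * x
        for k, (w, x) in enumerate(zip(hidden_weights, stimulus))
        if NEURON_TYPES[k] != silenced
    )


def model_predict(weights, stimulus, silenced=None):
    """Model with one unit per neuron type; the matching unit is knocked out."""
    return sum(
        w * x
        for k, (w, x) in enumerate(zip(weights, stimulus))
        if NEURON_TYPES[k] != silenced
    )


def knockout_train(steps=5000, lr=0.05, seed=0):
    """Fit the model by stochastic gradient descent on squared error.

    Each step draws one condition (control or one silenced type), applies
    the same knockout to the model, and updates only the active units.
    """
    rng = random.Random(seed)
    weights = [0.0] * len(NEURON_TYPES)
    conditions = [None] + NEURON_TYPES  # None = unsilenced control fly
    for _ in range(steps):
        silenced = rng.choice(conditions)
        stimulus = [rng.uniform(-1.0, 1.0) for _ in NEURON_TYPES]
        error = model_predict(weights, stimulus, silenced) - toy_fly(stimulus, silenced)
        for k, name in enumerate(NEURON_TYPES):
            if name != silenced:  # a knocked-out unit receives no gradient
                weights[k] -= lr * error * stimulus[k]
    return weights
```

Calling `knockout_train()` and then `model_predict(weights, stimulus, silenced="type_B")` gives the toy model's guess at how behavior changes when that hypothetical neuron type is silenced. Because each model unit is tied to one neuron type during training, the learned units can later be read as predictions about individual neuron types rather than as anonymous parameters.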

“We can actually predict neural activity computationally and ask how specific neurons contribute to behavior,” Cowley says. “This is something we couldn’t do before.”

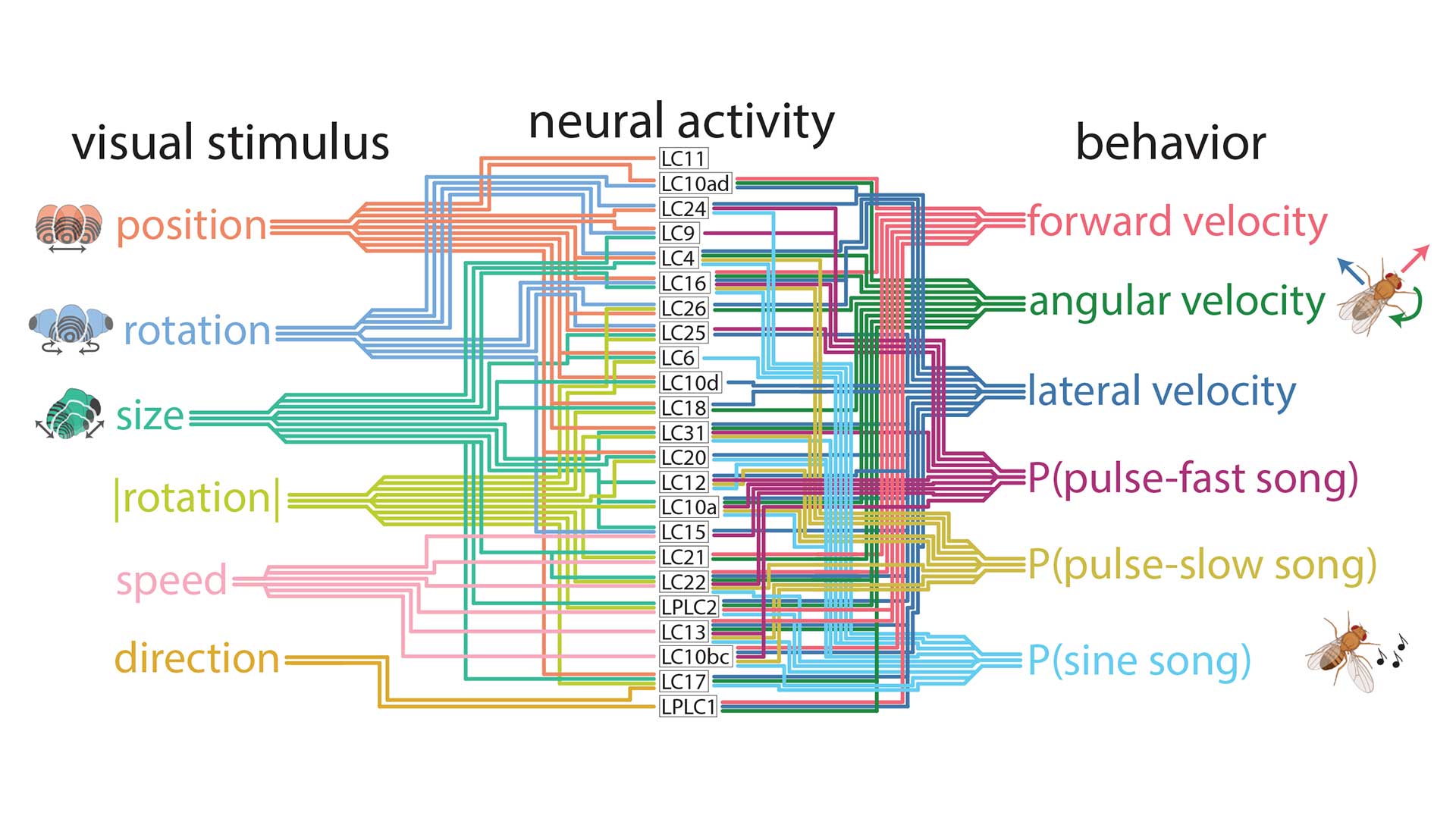

With their new AI, the team discovered that the fruit fly brain uses a “population code” to process visual data. Instead of one neuron type linking each visual feature to one action, as previously assumed, many combinations of neurons were needed to sculpt behavior. A chart of these neural pathways looks like an incredibly complex subway map and will take years to decipher. Still, it gets us where we need to go. It enables an AI model to predict how a real-life fruit fly will behave when presented with visual stimuli.

Does this mean AI could someday predict human behavior? Not so fast. Fruit fly brains contain about 100,000 neurons. The human brain has almost 100 billion. Referring to the subway map, Cowley says:

“This is what it’s like for the fruit fly. You can imagine what our visual system is like.”

Still, Cowley hopes the AI model will someday help us decode the computations underlying the human visual system. He says:

“This is going to be decades of work. But if we can figure this out, we’re ahead of the game. By learning [fly] computations, we can build a better artificial visual system. More importantly, we’re going to understand disorders of the visual system in much better detail.”

How much better? You’ll have to see it to believe it.

Written by: Samuel Diamond, Senior Communications Strategist | 516-367-5055

Funding

Starr Foundation, Simons Collaboration on the Global Brain, National Institutes of Health, NIH BRAIN Initiative, Howard Hughes Medical Institute, National Institute of Neurological Disorders and Stroke

Citation

Cowley, B., et al., “Mapping model units to visual neurons reveals population code for social behaviour”, Nature, May 22, 2024. DOI: 10.1038/s41586-024-07451-8

Principal Investigator

Benjamin Cowley

Assistant Professor

Ph.D., Carnegie Mellon University, 2018